Hadoop Training in Chennai

Hadoop Training in Chennai, offered by FITA Academy, teaches you the professional skills needed to ingest, store and analyse massive datasets. Big data plays a vital role in finding smart solutions in today’s agriculture, medicine, meteorology, marketing and cybersecurity, so joining FITA Academy’s Big Data Training in Chennai can significantly improve your job prospects with top companies. You will also work on a real-time industry project to gain practical experience with the Hadoop framework and ecosystem.

Course Highlights

- FITA Academy’s Big Data Hadoop Training in Chennai is designed and delivered by real-world industry experts with 6+ years of experience.

- We provide our courses in small groups to encourage interaction and give you the most individualised learning experience possible.

- For the benefit of students and working professionals, FITA Academy offers fast-track batches in addition to regular weekday and weekend batches.

- FITA Academy has formed 1,500+ placement partnerships with small to large-scale companies to provide our students with guaranteed placement support.

- Students receive regular assignments to help them develop their skills, and assessments at the end of each chapter help them evaluate their progress.

- The training sessions at FITA Academy are created to match your preferred learning method. You can choose between offline classes and live instructor-led online classes.

Course Description

The Big Data Course in Chennai starts from the basics to lay a strong foundation in the subject and gives you a thorough understanding of the Apache Hadoop and MapReduce frameworks. This module covers the types of data generation, HDFS core concepts and flow architecture, and data compression techniques. MapReduce is taught through Java programs, including its input and output formats.

The course then moves on to Apache Hive, Pig and HBase, covering their DML/DDL commands, script execution from the shell, and CAP theorem concepts. The final module covers Apache Sqoop, Flume and ZooKeeper. Students are introduced to Sqoop concepts, Flume features, the Hue architecture flow, ZooKeeper principles, Hadoop admin commands, balancer concepts and more.

Get trained by industry experts via instructor-led live online or classroom training, with 100% placement support.

Hadoop Certification in Chennai

The Hadoop Certification Course in Chennai awards students a certificate approved by FITA Academy. The certificate attests to their knowledge of the Hadoop framework and its Big Data ecosystem for effectively analysing large datasets. Earning this certification from a renowned training institute such as FITA Academy can give candidates a competitive edge in most interviews.

The training and practical framework knowledge that FITA Academy provides keep raising the value of this certificate. Our certification is a credential that attests to the candidate’s commitment to learning new things and becoming an authority on the subject. FITA Academy backs its Hadoop certification with the skills, knowledge and practical capabilities needed to stay competitive in the industry.

Industry Expert Trainers

LIVE Project

1,500+ Hiring Partners

Affordable Fees

Hadoop Job Opportunities

According to recent research by Statista, the worldwide Hadoop and Big Data market has grown by 75% in the past five years and is expected to keep growing. This growth has created numerous job opportunities in the IT industry.

Hadoop and Big Data professionals are among the most in-demand IT professionals at small and large companies alike. Holders of the FITA Academy Big Data Training in Chennai certificate can pursue roles such as Hadoop Developer, Hadoop Administrator, Hadoop Architect, Hadoop Tester, Big Data Engineer, Big Data Architect and Machine Learning Engineer.

Based on data collected from Glassdoor and AmbitionBox, the salary of a fresher Big Data Engineer ranges from approximately 3.0 to 4.5 LPA. Your package can grow with the expertise you gain through experience in the field.

Our Alumni Work At

Hadoop Training in Chennai Frequently Asked Questions

Students at FITA Academy learn to use the Hadoop framework and ecosystem to analyse large datasets by working on a real-time industry project under expert guidance. Our trainers, who have 6+ years of experience in the field, deliver top-notch training. Above all, our Hadoop certification has lifetime validity.

Request a callback from our admission staff to enrol in the Big Data Training in Chennai or visit any of the FITA Academy branches.

Students and working professionals can choose their preferred learning mode at FITA Academy. Our live instructor-led online classes let you advance your education and improve your job profile from the comfort of your home, and trained instructors also hold regular in-person classes at our branches.

FITA Academy has formed 1,500+ placement alliances with small to large-scale IT companies. Through these alliances, we offer guaranteed placement support to secure the future of students who successfully complete their courses.

We created FITA Academy in 2012 to provide high-quality, affordable IT training. More than 50,000 students have taken our training so far, and many of them are currently working for the most well-known IT companies. We aspire to give 1 million students the skills they need to pursue the IT careers of their dreams through our training programmes.

Additional Information on Hadoop Training in Chennai

Big Data and Hadoop are booming sectors of today’s IT industry, so enrol in the Big Data Hadoop Training in Chennai without delay to increase your job opportunities.

What is Hadoop?

Hadoop is an open-source framework for storing enormous volumes of data and running applications on clusters of commodity hardware. Its large storage capacity and powerful processing and computational capabilities let it handle a very large number of concurrent tasks or jobs. Its main purpose is to support big data technologies that enable advanced analytics such as predictive analytics, machine learning (ML) and data mining.

Demand for Hadoop

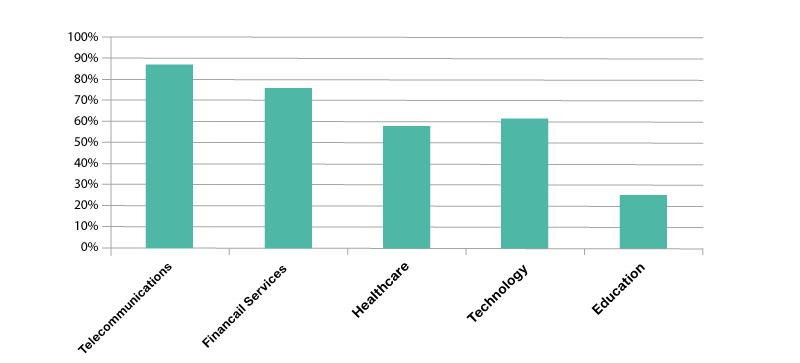

Below is a bar graph based on the results of recent research by Forbes on demand for Big Data in various industries.

As the global web grew and the amount of information to be processed increased, a need arose for systems that could operate virtually without fault on what is now known as Big Data. Huge amounts of data had to be processed, parsed, stored and retrieved, but hardware capable of handling that volume had not yet been developed. In any case, a single system would not have been enough to hold the many types of data the world was continually producing.

Key Features of Hadoop

Open Source

Hadoop is open source, meaning anyone can access its source code and adapt it to their business needs. Commercial distributions of Hadoop are also available from companies such as Cloudera and Hortonworks.

Scalable

Hadoop runs on a cluster of machines and is highly scalable: we can expand the cluster without downtime by adding new nodes as needed. Adding machines to the cluster in this way is called horizontal scaling, whereas vertical scaling means upgrading the hardware of existing machines, for example by doubling their disk capacity and RAM.
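
The capacity gained through horizontal scaling can be estimated with simple arithmetic. The Python sketch below is a rough illustration rather than a sizing tool: it assumes identical disks on every node, applies HDFS’s default replication factor of 3, and ignores disk space reserved for non-HDFS use. The function name `usable_capacity_tb` is hypothetical.

```python
# Rough capacity arithmetic for horizontal scaling (illustrative assumptions:
# identical disks per node, default HDFS replication factor of 3, and no
# space reserved for non-HDFS use).

def usable_capacity_tb(nodes: int, disk_tb_per_node: float, replication: int = 3) -> float:
    """Logical data (in TB) the cluster can hold once every block is replicated."""
    if nodes < 1 or replication < 1:
        raise ValueError("nodes and replication must be positive")
    return nodes * disk_tb_per_node / replication

if __name__ == "__main__":
    print(usable_capacity_tb(10, 12))  # 40.0 TB of data on 10 nodes
    print(usable_capacity_tb(15, 12))  # 60.0 TB after adding 5 more nodes
```

Because every block is stored three times, each added node contributes only about a third of its raw disk to the usable total.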

Speed

Hadoop uses HDFS (Hadoop Distributed File System) for storage. HDFS divides a huge file into smaller blocks and distributes them across the nodes of the Hadoop cluster. Because many blocks are processed in parallel, Hadoop can be faster than traditional database management systems on large workloads. Speed is crucial when working with massive amounts of unstructured data, and Hadoop can process terabytes of data in just a few minutes.
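
To make the block idea concrete, here is a minimal Python sketch of how a file is divided into fixed-size blocks. It assumes the 128 MB default block size used by Hadoop 2.x and later (configurable through `dfs.blocksize`); the function name `split_into_blocks` is hypothetical, and this is an illustration, not the HDFS implementation.

```python
# Minimal sketch (not the HDFS implementation) of dividing a file into
# fixed-size blocks. 128 MB mirrors the default HDFS block size in
# Hadoop 2.x and later.
BLOCK_SIZE = 128 * 1024 * 1024  # bytes

def split_into_blocks(file_size: int, block_size: int = BLOCK_SIZE) -> list[tuple[int, int]]:
    """Return (offset, length) pairs, one per block.

    The last block may be shorter than block_size; it is not padded.
    """
    if file_size < 0 or block_size <= 0:
        raise ValueError("file_size must be >= 0 and block_size > 0")
    blocks = []
    offset = 0
    while offset < file_size:
        length = min(block_size, file_size - offset)
        blocks.append((offset, length))
        offset += length
    return blocks

if __name__ == "__main__":
    one_gb = 1024 * 1024 * 1024
    blocks = split_into_blocks(one_gb + 10 * 1024 * 1024)  # 1 GB + 10 MB
    print(len(blocks))    # 9: eight full 128 MB blocks plus one 10 MB block
    print(blocks[-1][1])  # 10485760
```

Each of these blocks can then be stored on a different node and processed independently, which is what enables the parallelism described above.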

Fault-Tolerant

Fault tolerance is one of Hadoop’s most important features. By default, every block in HDFS has a replication factor of 3: HDFS makes two additional copies of each data block and stores them on different nodes in the cluster. If one copy is lost because a machine fails, two copies of the same block are still available. This is how Hadoop achieves fault tolerance.
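
The effect of a replication factor of 3 can be checked with a small simulation. The Python sketch below is illustrative only: node and block names are hypothetical, and each block’s replicas are simply placed on three distinct random nodes, whereas real HDFS placement is rack-aware.

```python
import itertools
import random

# Simplified replica placement: three distinct random nodes per block.
# Real HDFS placement is rack-aware; this sketch ignores racks.
REPLICATION_FACTOR = 3

def place_replicas(blocks, nodes, replication=REPLICATION_FACTOR, seed=0):
    """Map each block ID to a set of `replication` distinct nodes."""
    if replication > len(nodes):
        raise ValueError("need at least as many nodes as replicas")
    rng = random.Random(seed)
    return {block: set(rng.sample(nodes, replication)) for block in blocks}

def readable_blocks(placement, failed_nodes):
    """Return the blocks that still have at least one replica on a live node."""
    failed = set(failed_nodes)
    return {block for block, holders in placement.items() if holders - failed}

if __name__ == "__main__":
    nodes = [f"node{i}" for i in range(1, 6)]
    blocks = [f"blk_{i}" for i in range(10)]
    placement = place_replicas(blocks, nodes)
    # Any combination of two failed nodes still leaves every block readable.
    for failed in itertools.combinations(nodes, 2):
        assert readable_blocks(placement, failed) == set(blocks)
    print("all blocks readable after any two node failures")
```

The trade-off is storage: with three replicas, every terabyte of data occupies three terabytes of disk.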

Schema Independent

Hadoop can handle a wide variety of data formats. It is flexible enough to store data in different formats and can process structured, semi-structured and unstructured data.
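
This flexibility is often described as "schema-on-read": data is stored as it arrives, and a structure is applied only when it is read. The plain-Python sketch below illustrates the idea without any Hadoop APIs; the record layouts, field names and the `read_clicks` function are hypothetical.

```python
import csv
import io
import json

# Schema-on-read illustration: raw records in mixed formats are stored
# untouched, and a (user, clicks) schema is applied only at read time.
RAW_STORE = [
    '{"user": "asha", "clicks": 12}',    # semi-structured JSON
    "ravi,7",                             # structured CSV row
    "free-text log line with no fields",  # unstructured text
]

def read_clicks(raw_records):
    """Yield (user, clicks) pairs; records that do not fit the schema are skipped."""
    for record in raw_records:
        if record.startswith("{"):
            data = json.loads(record)
            yield data["user"], int(data["clicks"])
            continue
        row = next(csv.reader(io.StringIO(record)), [])
        if len(row) == 2 and row[1].strip().isdigit():
            yield row[0], int(row[1])
        # Anything else stays in storage unchanged for other readers.

if __name__ == "__main__":
    print(list(read_clicks(RAW_STORE)))  # [('asha', 12), ('ravi', 7)]
```

A different reader could apply a completely different schema to the same stored records, which is why no schema has to be fixed at load time.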

Low Latency Rate

Throughput is the amount of work completed in a given period of time, while latency is the delay before data is processed. Because Hadoop is built on distributed storage and parallel processing, each block of data is processed concurrently and independently of the others. In addition, code is moved to the data in the cluster rather than the data being moved to the code. Together, these two principles support high throughput and keep latency low.

Performance

Legacy systems such as RDBMSs typically process data sequentially, whereas Hadoop processes all the blocks of a dataset simultaneously. Thanks to this parallel processing, Hadoop performs significantly better than legacy systems such as RDBMSs on large datasets.
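
The MapReduce pattern behind this parallelism can be sketched in a few lines of Python. This is an illustration of the pattern, not Hadoop’s API: each "block" is a hypothetical list of text lines, and a thread pool stands in for the cluster’s worker nodes.

```python
from collections import Counter
from concurrent.futures import ThreadPoolExecutor

# Word count in the MapReduce style. Each block is mapped independently,
# then the partial results are merged in a reduce step.

def map_block(lines):
    """Map phase with local aggregation (similar to a combiner) for one block."""
    counts = Counter()
    for line in lines:
        counts.update(line.lower().split())
    return counts

def reduce_counts(partials):
    """Reduce phase: merge the per-block counts into one result."""
    total = Counter()
    for partial in partials:
        total.update(partial)
    return total

def word_count(blocks, workers=4):
    # All blocks are submitted at once instead of being read one after another.
    with ThreadPoolExecutor(max_workers=workers) as pool:
        partials = list(pool.map(map_block, blocks))
    return reduce_counts(partials)

if __name__ == "__main__":
    blocks = [
        ["big data needs big clusters"],
        ["hadoop processes big data", "in parallel"],
    ]
    print(word_count(blocks)["big"])  # 3
```

In CPython, threads do not speed up CPU-bound work because of the global interpreter lock; the sketch only shows the structure. In Hadoop, the real speed-up comes from running map tasks on separate machines.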

Data Locality

Moving the code to the data, rather than the data to the code, is a guiding idea of Hadoop. Data locality refers to moving computation tasks to the nodes where the data is stored instead of moving the data to the tasks. This keeps data movement within the cluster to a minimum, which matters because transferring data across the network becomes slow and expensive at the petabyte scale.
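
A locality-aware scheduler can be sketched as a greedy assignment: run each task on a node that already holds a replica of its input block, and fall back to another node only when no local node is free. The Python sketch below is a simplified illustration with hypothetical block locations and slot counts; Hadoop’s real schedulers also consider rack locality.

```python
# Greedy locality-aware scheduling (illustrative; not Hadoop's scheduler).

def schedule(block_locations, slots_per_node):
    """Assign each block's task to a node.

    Returns (assignment, local_count), where local_count is the number of
    tasks that run on a node already holding their input block.
    """
    free = dict(slots_per_node)
    assignment = {}
    local = 0
    for block, holders in block_locations.items():
        # Prefer a free node that stores a replica of the block (data-local).
        node = next((n for n in sorted(holders) if free.get(n, 0) > 0), None)
        if node is not None:
            local += 1
        else:
            # No local slot: run elsewhere, so the block must cross the network.
            node = next((n for n in sorted(free) if free[n] > 0), None)
            if node is None:
                raise RuntimeError("no free slots left in the cluster")
        free[node] -= 1
        assignment[block] = node
    return assignment, local

if __name__ == "__main__":
    locations = {
        "blk_1": {"node1", "node2"},
        "blk_2": {"node2", "node3"},
        "blk_3": {"node1", "node3"},
        "blk_4": {"node1", "node2"},
    }
    slots = {"node1": 1, "node2": 1, "node3": 1, "node4": 1}
    assignment, local = schedule(locations, slots)
    print(local)               # 3 of the 4 tasks are data-local
    print(assignment["blk_4"]) # node4: blk_4 has to be copied over the network
```

The greedy order is a simplification and is not guaranteed to maximise the number of local tasks; it only shows why placement decisions reduce network traffic.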

Shared-Nothing Architecture

The nodes of a Hadoop cluster are independent of one another and do not share resources or storage. This design is known as a shared-nothing architecture. Because each worker node functions independently, the failure of one node does not affect the cluster as a whole.

Cost Effective

Unlike traditional relational databases, which need expensive hardware and high-end CPUs to handle Big Data, Hadoop is open source and runs on affordable commodity hardware, making it a cost-efficient solution. Because storing large amounts of data in typical relational databases is not cost-effective, businesses have begun deleting raw data, which may not be in the best interests of their operations. Hadoop offers two key cost-saving advantages: first, it is open source and therefore free to use; second, it runs on cheap commodity hardware.

Compatibility

Hadoop’s storage layer is HDFS, and its processing engine is MapReduce. However, MapReduce does not have to be the processing engine: newer processing frameworks such as Apache Spark and Apache Flink also use HDFS as their storage system. Based on our needs, we can switch Hive’s execution engine to Apache Tez or Apache Spark. Apache HBase, a NoSQL columnar database, also uses HDFS as its storage layer.

Multiple Languages

Although Hadoop is built primarily in Java, it also supports other languages such as Python, Ruby, Perl and Groovy, for example through Hadoop Streaming.
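
With Hadoop Streaming, the mapper and reducer can be ordinary scripts that read lines from standard input and write tab-separated key/value lines to standard output; the framework sorts the mapper output by key before the reducer sees it. The Python sketch below shows a word-count mapper and reducer in that style and simulates the map, sort and reduce steps locally. The function names are assumptions for this example. In a real job, each script would read `sys.stdin` and print its results, and would be passed to the streaming JAR through its `-mapper` and `-reducer` options.

```python
from itertools import groupby

# Word-count mapper and reducer in the Hadoop Streaming style:
# records are "key<TAB>value" text lines.

def mapper(lines):
    """Emit "word\t1" for every word in the input lines."""
    for line in lines:
        for word in line.split():
            yield f"{word.lower()}\t1"

def reducer(sorted_lines):
    """Sum the counts per word; the input must already be sorted by key."""
    pairs = (line.rstrip("\n").split("\t", 1) for line in sorted_lines)
    for word, group in groupby(pairs, key=lambda kv: kv[0]):
        yield f"{word}\t{sum(int(count) for _, count in group)}"

if __name__ == "__main__":
    # Local simulation: map, sort (standing in for the shuffle), then reduce.
    text = ["Hadoop stores data", "Hadoop processes data"]
    for out in reducer(sorted(mapper(text))):
        print(out)
```

Because the framework guarantees sorted input to the reducer, the reducer only needs to group adjacent lines, which keeps the script small and memory-efficient.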

Enrol in the Big Data Hadoop Training in Chennai at FITA Academy to gain comprehensive knowledge of the Hadoop framework and ecosystem for integrating and analysing huge datasets effectively. This course can open the door to numerous job opportunities worldwide.

FITA Academy Branches

Chennai

Bangalore

Coimbatore